Product: 3D Prophet III

NVIDIA GeForce 3 aka NV20

Founded in 1993, NVIDIA has, since releasing its first 3D card in 1995, delivered a series of 3D graphics cards, each more powerful than the last. Since the introduction of the TNT graphics chip in 1998, NVIDIA has become the undisputed giant among 3D chipmakers worldwide: it buried all of its competitors and even recently acquired 3dfx, the last survivor capable of offering a viable alternative to NVIDIA’s supremacy. Still, NVIDIA didn’t earn its king-of-the-hill status easily. For years now gamers have had eyes only for NVIDIA GPUs, known for their amazing power and for the quality of their drivers; when you buy a graphics card, drivers are the most important thing after the graphics engine itself for getting the most out of your purchase. When NVIDIA introduced the first GeForce, the GeForce 256, it gave it the sweet designation of GPU, which stands for Graphics Processing Unit. The name was well chosen: the GeForce 3 contains 57 million transistors against only 42 million for the Pentium 4 processor! That figure alone hints at the power you can expect from the GeForce 3. After months of waiting and a lot of crazy rumors, the GeForce 3 is out at last, to the great pleasure of gamers. The GeForce 3 is NVIDIA’s chance to demonstrate new technologies to beat ATI and its Radeon line of cards, affirming once again its unrivalled supremacy. Below are the features of what we can call the beast:

- 256-bit GPU manufactured on a 0.15µ process
- 4 pixel pipelines
- 2 simultaneous textures per pixel
- 4 active textures max per pixel per pass
- 36 simultaneous pixel shading operations per pass
- 128 vertex instructions per pass
- GPU clocked at 200 MHz
- DDR memory clocked at 230 MHz
- 64 MB of onboard DDR memory using a 128-bit interface
- 7.36 GB per second memory bandwidth
- DVD motion compensation technology
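
The bandwidth and fillrate figures in this list follow from the clocks and bus width quoted alongside them. Here is a minimal Python sketch of that arithmetic; the function names are hypothetical, and it assumes DDR memory moves data twice per clock and that each pipeline outputs one pixel per clock:

```python
def memory_bandwidth_gb_s(clock_mhz, bus_width_bits, transfers_per_clock=2):
    """Peak memory bandwidth in GB/s (1 GB = 10**9 bytes).

    transfers_per_clock=2 models DDR memory (assumption: two transfers per clock).
    """
    bytes_per_transfer = bus_width_bits // 8
    return clock_mhz * 1e6 * transfers_per_clock * bytes_per_transfer / 1e9


def pixel_fillrate_mpixels_s(core_clock_mhz, pipelines):
    """Theoretical pixel fillrate, assuming one pixel per pipeline per clock."""
    return core_clock_mhz * pipelines


def texel_fillrate_mtexels_s(core_clock_mhz, pipelines, textures_per_pixel):
    """Theoretical texel fillrate: pixel fillrate times textures applied per pixel."""
    return pixel_fillrate_mpixels_s(core_clock_mhz, pipelines) * textures_per_pixel


# Figures taken from the feature list above.
bandwidth = memory_bandwidth_gb_s(clock_mhz=230, bus_width_bits=128)
pixels = pixel_fillrate_mpixels_s(core_clock_mhz=200, pipelines=4)
texels = texel_fillrate_mtexels_s(core_clock_mhz=200, pipelines=4, textures_per_pixel=2)
print(f"{bandwidth:.2f} GB/s, {pixels} Mpixels/s, {texels} Mtexels/s")
```

With the listed 230 MHz DDR on a 128-bit interface this reproduces the 7.36 GB per second quoted above. These are theoretical peaks, not measured results.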

If these numbers aren’t especially impressive, particularly coming from NVIDIA, it’s only because NVIDIA’s engineers focused their efforts on developing new technologies inside the chip: the Light Speed Memory Architecture, which optimizes memory bandwidth, and, the ultimate refinement, a brand new graphics engine called nFinite FX, which we’ll review in detail.

Light Speed Memory Architecture

As said before, the GeForce 3’s specifications don’t differ much from those of the GeForce II Ultra, and most of you will already have noticed that the GeForce 3’s fillrate is lower than the GeForce II Ultra’s (1 Gpixel/s). The fillrates announced by 3D chipmakers are typically never reached in practice, but that is no longer the case thanks to the new architecture the GeForce 3 carries. With the GeForce 3, most of the changes are under the hood! The new Light Speed Memory Architecture aims to optimize memory bandwidth for a better and more realistic gaming experience. It includes three new technologies that answer to the sweet names of ‘Z-Occlusion’, ‘Lossless Z Compression’ and ‘Z-Buffer CrossBar’.

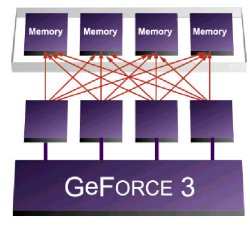

CrossBar

The GeForce 3 GPU comes with a new memory controller called CrossBar, whose main task is to more than compensate for the chip’s slower fillrate by avoiding wasted bits and greatly reducing latency, ensuring it beats the GeForce II Ultra 99% of the time. Traditionally a GPU uses a 256-bit memory controller that can only transfer data in 256-bit chunks. So if a triangle is only one pixel in size, it requires a memory access of 32 bytes when only 8 bytes are actually needed: 75% of the memory bandwidth is wasted in the process! That’s why NVIDIA solved the problem by implementing the new CrossBar controller. Unlike yesterday’s GPUs, the CrossBar controller has four independent memory sub-controllers that can each handle a 64-bit block per clock, for a total of 256 bits (they can also be grouped to handle a full 256-bit access). This new memory controller is the key to better memory management, answering the needs of today’s game developers as 3D scenes grow more complex (the number of triangles per frame has increased greatly in recent games). Compared to a traditional memory controller, the CrossBar cuts the average latency down to 25%, so any 3D application can benefit from this marvel of technology. According to NVIDIA, the CrossBar controller can speed up memory access by up to four times. That is obviously the case when the data about to be read or written is only 64 bits wide, but such a situation is far from an everyday occurrence.

GeForce 3 CrossBar Controller
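
The wasted-bandwidth argument in the CrossBar section is simple rounding arithmetic. The Python sketch below is only a toy model (the function names are hypothetical, and it is not a description of how NVIDIA's controller is actually implemented). It assumes every request is rounded up to a whole number of accesses of a fixed size:

```python
import math


def bytes_transferred(request_bytes, granularity_bytes):
    """Round a request up to a whole number of fixed-size memory accesses."""
    return math.ceil(request_bytes / granularity_bytes) * granularity_bytes


def wasted_fraction(request_bytes, granularity_bytes):
    """Fraction of the transferred bytes that the request did not need."""
    moved = bytes_transferred(request_bytes, granularity_bytes)
    return (moved - request_bytes) / moved


# One-pixel triangle needing 8 bytes, as in the text.
monolithic = wasted_fraction(request_bytes=8, granularity_bytes=32)  # 256-bit accesses
crossbar = wasted_fraction(request_bytes=8, granularity_bytes=8)     # 64-bit accesses
print(f"256-bit controller wastes {monolithic:.0%}, 64-bit sub-controller wastes {crossbar:.0%}")
```

In this model the 8-byte request costs a full 32-byte access on a 256-bit controller, while a 64-bit sub-controller moves only the 8 bytes needed. That is where the "up to four times" best case comes from.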

Z-Occlusion Culling

I’m pretty sure you’re wondering what the hell Z-Occlusion Culling is. The fact is that the name of this new technology isn’t clear at all, but behind it lies a very simple idea for boosting 3D performance. Just like the old PowerVR chips from NEC or the recent Kyro 2, the Z-Occlusion Culling technology featured in the GeForce 3’s Light Speed Memory Architecture is in fact a form of HSR (hidden surface removal). When a GPU renders a 3D scene, it normally calculates all the pixels, even those that will end up hidden behind another pixel, before the scene is finally displayed. The purpose of Z-Occlusion Culling is to skip the pixels that would be hidden so that they are never processed by the pixel shader, saving 50% of the bandwidth in current games. To get the best results from Z-Occlusion Culling, though, the 3D application should ideally sort its scene’s objects (front to back) before they are sent to the 3D chip.
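
To see why the order of objects matters, here is a minimal Python sketch of a depth test applied before shading. It is an illustration only, not NVIDIA's hardware logic: the function names are hypothetical, a smaller z is assumed to mean closer to the viewer, and each "layer" is a flat surface covering a tiny 2x2 screen.

```python
def shaded_fragments(fragments):
    """Count fragments that pass an early depth test and so reach the pixel shader.

    fragments: iterable of (x, y, z) in submission order; smaller z is closer.
    """
    depth_buffer = {}
    shaded = 0
    for x, y, z in fragments:
        if z < depth_buffer.get((x, y), float("inf")):
            depth_buffer[(x, y)] = z  # this fragment is visible so far: shade it
            shaded += 1
        # otherwise it is hidden behind an earlier fragment and is skipped
    return shaded


def full_screen_layer(z, width=2, height=2):
    """A flat surface at depth z covering every pixel of a tiny screen."""
    return [(x, y, z) for y in range(height) for x in range(width)]


layers = [full_screen_layer(z) for z in (1.0, 2.0, 3.0)]
front_to_back = [frag for layer in layers for frag in layer]
back_to_front = [frag for layer in reversed(layers) for frag in layer]

print(shaded_fragments(front_to_back), shaded_fragments(back_to_front))
```

Submitted front to back, only the nearest layer is shaded. Submitted back to front, every layer passes the test and is shaded, so the culling saves nothing.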

Lossless Z Compression

This new compression process concerns the Z value of a pixel (where Z stands for the depth of the pixel in a 3D scene). When a scene is displayed, the Z value (coded in 16, 24 or 32 bits) determines whether a pixel should be visible or not. The more beautiful and realistic games become, the more depth values there are, and the more they clog up the memory. Just like in ATI’s Radeon chips, the GeForce 3’s Lossless Z Compression reduces the z-buffer bandwidth required by compressing the data stream by a factor of 4:1. Although NVIDIA doesn’t detail the algorithm used by Lossless Z Compression, it can in theory reduce z-buffer memory traffic by 75%. Obviously the compression is not destructive and doesn’t alter the way scenes are displayed.
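
Since NVIDIA doesn’t document its algorithm, the Python sketch below is emphatically not the GeForce 3’s method. It is a hypothetical toy scheme (all names are made up) showing what "lossless" means for depth data and how a 4:1 ratio translates into a 75% saving. It assumes a tile of depth values can be stored compactly when the values form a perfect ramp, and is kept raw otherwise.

```python
def compress_tile(depths):
    """Toy lossless scheme: store a perfect ramp as (start, step, count), else keep raw."""
    if len(depths) >= 2:
        step = depths[1] - depths[0]
        if all(b - a == step for a, b in zip(depths, depths[1:])):
            return ("ramp", depths[0], step, len(depths))
    return ("raw", list(depths))


def decompress_tile(packed):
    """Rebuild exactly the original depth values from either representation."""
    if packed[0] == "ramp":
        _, start, step, count = packed
        return [start + i * step for i in range(count)]
    return list(packed[1])


def bandwidth_saving(ratio):
    """Fraction of traffic saved at a given compression ratio (4 means 4:1)."""
    return 1 - 1 / ratio


ramp_tile = [1000, 1004, 1008, 1012, 1016, 1020, 1024, 1028]   # integer depth values
noisy_tile = [1000, 1007, 998, 1012, 1003, 1020, 999, 1028]

for tile in (ramp_tile, noisy_tile):
    assert decompress_tile(compress_tile(tile)) == tile  # nothing is lost

print(f"{bandwidth_saving(4):.0%} less z-buffer traffic at 4:1")
```

Whichever representation is chosen, decompression returns the original values bit for bit, which is why the displayed scene is unaffected.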